From Object Detection to Multimodal AI: The Future of Video Intelligence

From Seeing Objects to Understanding Stories: The Rise of Multimodal AI Video Analysis

For years, AI video analytics meant one primary capability: detecting and labeling objects in video frames. Models like YOLO (You Only Look Once) and Faster R-CNN transformed computer vision by enabling fast, real-time detection with impressive accuracy.

But as industries demanded more than speed — they demanded context, explanation, and insight — traditional object detection systems reached their limits.

Identifying a person, car, or helmet is useful.

Understanding what is happening, how events unfold, and why they matter is transformative.

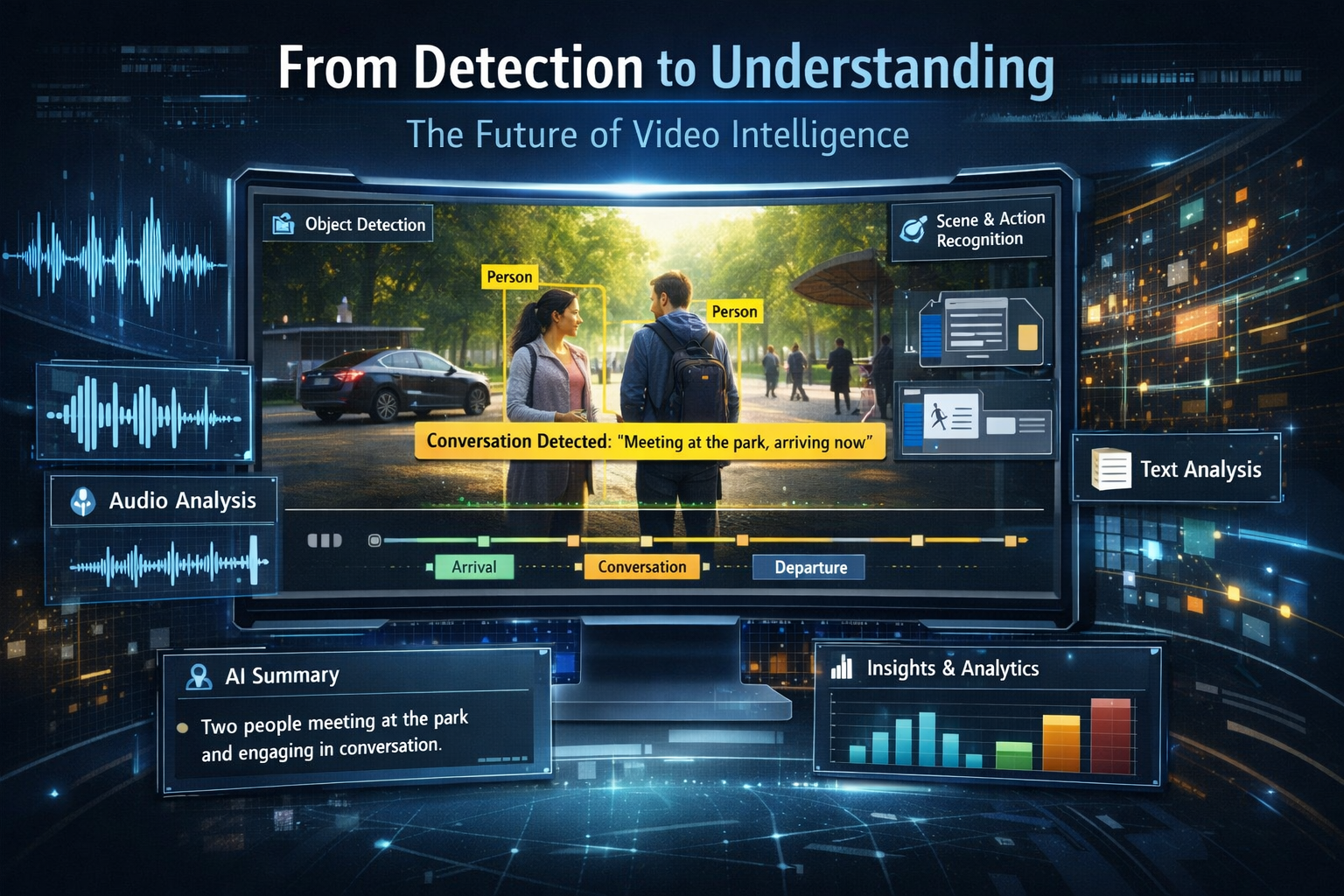

This shift marks the emergence of multimodal AI video analysis, where platforms like VideoSenseAI combine visual understanding, audio interpretation, language models, and structured analytics to convert raw footage into actionable intelligence.

From Object Detection to Scene Understanding

Traditional Object Detection

Classic object detection systems typically:

- Use convolutional neural networks (CNNs)

- Detect predefined object classes (e.g. ~80 classes in the COCO dataset)

- Output bounding boxes and confidence scores

- Perform best on short, high-quality video clips

These systems excel in use cases such as:

- Live camera monitoring

- Traffic counting

- Embedded or edge deployments

However, they lack semantic depth. They treat frames independently and cannot reliably connect:

- Objects to actions

- Actions to environments

- Sequences to outcomes

Scene Understanding with Multimodal AI

Multimodal AI platforms like VideoSenseAI move beyond isolated detections by introducing contextual reasoning.

They do this by:

- Analyzing full-length videos, not just short clips

- Using open-vocabulary and transformer-based models that adapt dynamically

- Understanding relationships and co-occurrences between objects

- Interpreting visual, audio, and temporal signals together

- Producing summaries, timelines, and structured data automatically

This evolution represents a shift from perception to comprehension — from labeling pixels to understanding stories.

What Makes Multimodal AI Fundamentally Different

A multimodal system processes multiple data types simultaneously:

- Visual data — video frames and motion

- Audio data — speech, sound events, tone

- Structured metadata — object counts, timelines, statistics

- Language understanding — AI-generated summaries and explanations

By combining vision + audio + language + time, VideoSenseAI creates a holistic understanding of what is happening inside a video — not just what appears in a single frame.

Inside a Multimodal Video Intelligence Pipeline

1) Video Ingestion

Users upload a file or paste a YouTube link. The system automatically preprocesses and optimizes the video for analysis.

2) Visual & Audio Recognition

Transformer-based models analyze frames and audio streams to detect:

- Objects and environments

- Speech and keywords

- Sound events and context

Examples include people, vehicles, safety equipment, landscapes, machinery, speech segments, and ambient sounds.

3) Structured Outputs

Results are organized into:

- Per-frame and aggregated CSV files

- Interactive charts and object distributions

- Timelines showing when objects and events occur

4) AI Summarization

Language models interpret detections across time, generating human-readable summaries that explain actions, behaviors, and key moments.

5) Visualization & Export

Users can explore dashboards, filter by object or word, generate GIFs/boomerangs, and export data for reporting or downstream analytics.

Real-World Use Cases

Security & Surveillance

Detect crowding, unusual behavior, vehicle movement, and spoken keywords — all with timestamped evidence.

Retail & Marketing

Analyze customer flow, dwell time, product interaction, and in-store behavior automatically.

Construction & Safety

Monitor PPE compliance and object co-occurrences (e.g. helmet + vest + worker) across long recordings.

Sports Analytics

Capture athletes, equipment, environment, and conditions together — providing performance context beyond raw motion.

Content Creation

Generate searchable transcripts, summaries, and highlight moments from long-form video content.

From Frame-Based Detection to Contextual Intelligence

Traditional detection models treat each frame as isolated.

Multimodal systems understand continuity over time.

For example:

- A person enters a store (event start)

- Interacts with products (behavior)

- Speaks with staff (audio context)

- Leaves the scene (event completion)

This enables behavioral, operational, and narrative analytics — the true goal of video intelligence.

Ethical and Technical Challenges

As video AI advances, important challenges arise:

- Data privacy and GDPR compliance

- Bias reduction in visual and language models

- Energy efficiency for large-scale inference

- Transparency and explainability of AI outputs

VideoSenseAI addresses these through secure data handling, configurable inference limits, and clear visual representations of detected elements and timelines.

Why Transformers Power the Next Generation

Transformer architectures — originally designed for language — now drive vision-language models such as:

- CLIP

- VideoCLIP

- GPT-4V

- LLaVA

- VideoSenseAI’s hybrid multimodal pipeline

These models align visual tokens, audio signals, and language embeddings, enabling reasoning across frames and modalities, not just within them.

Traditional Detection vs Multimodal Intelligence

| Feature | Traditional Models (YOLO, SSD) | VideoSenseAI |

|---|---|---|

| Object Classes | Fixed datasets | Adaptive, open-vocabulary |

| Audio Understanding | No | Yes |

| Output | Bounding boxes | Timelines, summaries, CSVs |

| Context Awareness | Minimal | Deep, temporal |

| Video Length | Short clips | Long videos |

| Search & Filtering | No | Yes |

| AI Summaries | No | Yes |

Conclusion: The Next Era of Video Intelligence

The future of video analysis is multimodal.

It’s not enough to detect what appears on screen. Modern systems must understand actions, relationships, audio cues, and narrative flow.

With VideoSenseAI, video becomes more than footage — it becomes searchable, analyzable, and meaningful data.

From raw video to actionable insight — VideoSenseAI turns every frame, sound, and moment into knowledge.

Check this article on how VideoSenseAI wokrs: https://videosenseai.com/blogs/turn-video-into-searchable-data/

Related Guides

If you're exploring AI-powered video intelligence, you may also find these in-depth guides useful:

- What Is a Video Search Engine? How AI Turns Video Into Searchable Data

- How to Search Inside Videos for Objects, Words & Events Using AI

These resources explain how modern video indexing works and how you can turn long footage into structured, searchable data.